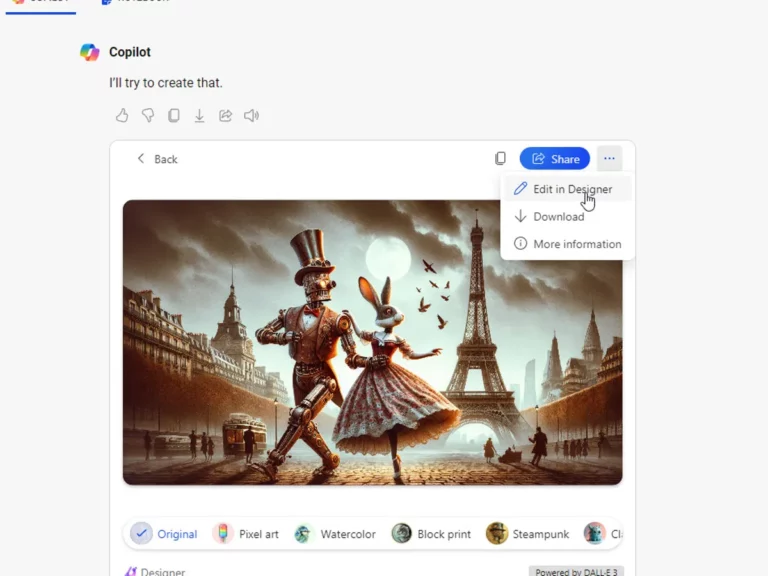

A Microsoft whistleblower has reported significant flaws in Copilot’s image generation capabilities and expressed frustration at the company’s lack of action. Shane Jones, an AI engineering manager, disclosed his concerns to Microsoft’s board and the FTC, highlighting systemic issues with Copilot’s text-to-image tool. Despite Jones’ efforts, neither Microsoft nor OpenAI has taken adequate steps to fix the vulnerabilities.

Jones discovered vulnerabilities in Copilot’s image generation, including the unintentional depiction of sexually objectifying imagery and inappropriate content such as teenagers with assault rifles. He reported these issues internally but received no satisfactory response. Microsoft directed him to OpenAI, from which he also received no response. Instead, he was met with legal demands to remove his public disclosure.

The whistleblower’s efforts to ensure the safety of Copilot’s users have been met with resistance, indicating a lack of accountability within the companies involved.

The comparison between Copilot’s intrusive presence in Windows 11 and the infamous Clippy draws attention to ongoing concerns regarding AI image generation. Shane Jones, dissatisfied with Microsoft’s response to his safety concerns, alleges a lack of direct communication from the company’s legal team. Despite his efforts to escalate the matter to lawmakers, Jones feels that Microsoft has not adequately addressed the vulnerabilities he identified.

In response, Microsoft emphasizes its commitment to addressing employee concerns and enhancing the safety of its technology. However, Jones remains skeptical, highlighting the lack of appropriate reporting tools within the company to communicate potential problems with AI products like Copilot.

This contrast with Google’s proactive response to similar issues with Gemini’s image generation capabilities underscores the need for greater transparency and accountability in the AI industry. Jones stresses the importance of Microsoft demonstrating a commitment to AI safety and transparency to stakeholders and society, urging the company to invest in the infrastructure needed to prevent future incidents.

Jones’ efforts to hold Microsoft accountable for AI safety reflect broader concerns about the ethical implications of AI advancement and the need for robust oversight mechanisms.