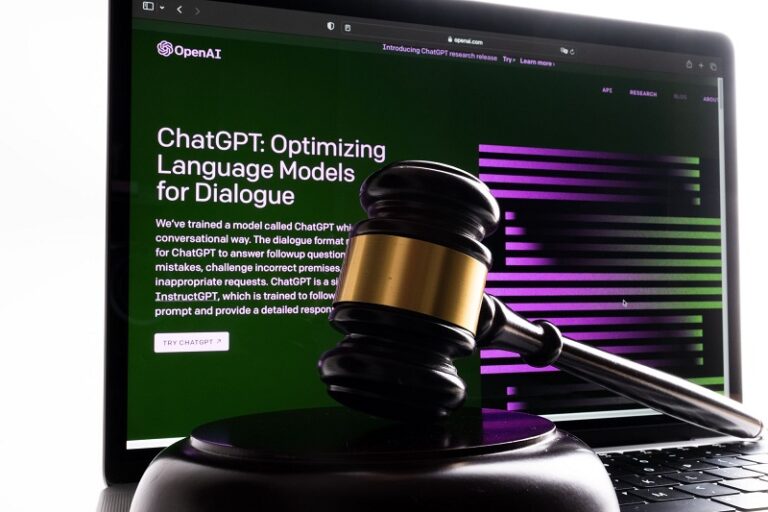

Privacy activist group noyb (None of Your Business) has filed a complaint against OpenAI, alleging that its ChatGPT service violates the General Data Protection Regulation (GDPR). The complaint, filed with the Austrian data protection authority, claims that ChatGPT’s inability to correct inaccurate information puts it at odds with EU law.

The complaint centers on ChatGPT’s response to a request for a public figure’s date of birth. Although the information is not available online, ChatGPT inferred an incorrect date. When the subject asked for the data to be corrected or erased, noyb alleges, OpenAI had no mechanism to correct the false information, only the option to “hide” it.

The GDPR is clear: personal data must be accurate, and individuals have the right to request corrections. Maartje de Graaf, data protection lawyer at noyb, emphasizes the gravity of the situation, stating, “Making up false information is quite problematic… If a system cannot produce accurate and transparent results, it cannot be used to generate data about individuals.”

This is not the first time AI models have clashed with privacy laws. A 2023 paper highlighted the issue, and large language models like ChatGPT have struggled with compliance because of how they process and store data. Italy previously imposed a temporary restriction on ChatGPT over data privacy concerns.

The complaint may lead to a fine of up to 4% of OpenAI’s global annual turnover, depending on the severity of the breach. noyb expects the sanction to be “effective, proportionate, and dissuasive.” OpenAI has yet to respond to the complaint.

This case could set a precedent for AI companies by underscoring the need for accurate and transparent data processing. As AI technology advances, it will have to adapt to legal requirements and comply with data protection laws. The outcome of this complaint could influence how AI models are developed and how they handle personal data.